Real-Time Data Processing Challenges in Live Betting Platforms

What is real-time data processing in live betting platforms?

Real-time data processing in live betting platforms refers to the immediate ingestion, analysis, and distribution of betting-related information with minimal latency. These systems must handle continuous streams of odds updates, match statistics, player actions, and market movements whilst maintaining response times under 100 milliseconds.

Live betting platforms process three primary data types: sports feed data from official providers, user interaction data including bet placements and account activities, and internal calculation data such as risk assessments and odds adjustments. Industry standards require sub-second processing for most betting markets, with critical events demanding response times under 50 milliseconds.

Why do live betting platforms face unique data processing challenges?

Live betting platforms experience data volumes that can exceed 100,000 events per second during major sporting events. This velocity creates bottlenecks in traditional processing systems designed for batch operations rather than continuous streams.

The platforms must simultaneously calculate odds for thousands of markets whilst processing bet placements from concurrent users. A single football match can generate over 500 distinct betting markets, each requiring constant recalculation based on match events and betting activity.

Integration complexity compounds these challenges. Platforms typically connect to 10-15 different sports data providers, each with its own data formats and delivery mechanisms. Payment processors, regulatory reporting systems, and fraud detection services introduce further integration points that must also meet real-time performance standards.

What are the primary architectural patterns for live betting data processing?

Event-driven architecture forms the foundation of modern live betting platforms. This pattern treats each data point as an event that triggers specific processing workflows, enabling parallel processing and reducing system coupling.
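As a rough illustration of this pattern, the sketch below uses a small in-process event bus. The names EventBus, subscribe, publish, and the "goal_scored" event type are assumptions made for this example, not part of any particular platform; a production system would normally publish to a message broker rather than call handlers directly.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal in-process event bus (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver the payload to every handler and return how many were notified."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
recalculated: List[str] = []          # stands in for an odds recalculation workflow
audit_log: List[Dict[str, Any]] = []  # stands in for a reporting workflow

# Two independent workflows react to the same event without knowing about each other.
bus.subscribe("goal_scored", lambda e: recalculated.append(e["match_id"]))
bus.subscribe("goal_scored", audit_log.append)

notified = bus.publish("goal_scored", {"match_id": "m-1", "minute": 42})
```

The publisher does not know which workflows exist, which is where the reduced coupling comes from: adding a third subscriber requires no change to the code that emits the event.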

Microservices architecture divides platform functionality into discrete services: odds calculation, bet processing, user management, and data ingestion. Each service scales independently based on demand, preventing bottlenecks in high-traffic scenarios.

Stream processing handles continuous data flows through technologies like Apache Kafka Streams or Apache Flink. This approach processes events as they arrive rather than batching them, reducing latency and improving user experience.

Message queuing systems using Apache Kafka or RabbitMQ decouple data producers from consumers. Publishers send events to queues without waiting for processing completion, whilst consumers process events at optimal rates.
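The decoupling described above can be sketched with Python's standard library queue standing in for a broker such as Kafka or RabbitMQ. This is an in-process analogy under that assumption, not broker client code; the event fields and the sentinel convention are invented for the example.

```python
import queue
import threading
from typing import List

events: queue.Queue = queue.Queue(maxsize=1000)  # bounded, so a slow consumer applies back-pressure
processed: List[int] = []
SENTINEL = None  # hypothetical shutdown marker


def consumer() -> None:
    """Drain the queue at the consumer's own pace until the sentinel arrives."""
    while True:
        item = events.get()
        if item is SENTINEL:
            break
        processed.append(item["seq"])


worker = threading.Thread(target=consumer)
worker.start()

# The producer enqueues and moves on; it never waits for processing to complete.
for seq in range(5):
    events.put({"market": "match_winner", "seq": seq})
events.put(SENTINEL)

worker.join()
```

With a single consumer the events are handled in the order they were published. Adding more consumers would raise throughput but give up that global ordering, which is the trade-off that partitioned brokers manage per key.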

How do latency requirements impact system design decisions?

Latency requirements dictate infrastructure placement and technology choices. Sports betting demands response times under 100 milliseconds for odds updates, whilst casino games require sub-20 millisecond responses for certain interactions.

Network optimisation strategies include deploying servers in multiple geographical regions to reduce physical distance between users and processing centres. Content delivery networks cache static content and route dynamic requests to nearest processing nodes.

Edge computing places processing capabilities closer to data sources and end users. Sports venues with on-site processing nodes can transmit data faster than traditional cloud-only architectures.

Database optimisation focuses on read performance through techniques like connection pooling, query caching, and strategic indexing. Write operations use asynchronous processing where consistency requirements allow.

What are the most effective data streaming technologies for live betting?

Apache Kafka dominates high-throughput messaging requirements in live betting platforms. Kafka handles millions of messages per second with configurable durability and ordering guarantees. Its partition-based architecture enables horizontal scaling and parallel processing.

Redis provides in-memory data processing for frequently accessed information like current odds, user sessions, and temporary calculations. Redis clusters can process over 1 million operations per second with sub-millisecond latency.

Apache Flink processes streaming data with exactly-once processing guarantees and low latency. Flink’s windowing capabilities enable complex event processing like calculating betting trends over time intervals.
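To make the windowing idea concrete, the following is a plain Python sketch of a tumbling (fixed, non-overlapping) window that totals stakes per interval. It does not use Flink's API; the function name and the (timestamp, stake) tuple layout are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_totals(
    bets: Iterable[Tuple[float, float]], window_seconds: int
) -> Dict[int, float]:
    """Sum stakes per fixed window, keyed by the window's start time in seconds."""
    totals: Dict[int, float] = defaultdict(float)
    for timestamp, stake in bets:
        # Every timestamp falls into exactly one window.
        window_start = int(timestamp // window_seconds) * window_seconds
        totals[window_start] += stake
    return dict(totals)


# (event time in seconds, stake) pairs; values are made up for the example.
bets = [(0.5, 10.0), (3.2, 5.0), (10.0, 20.0), (19.9, 1.0), (25.0, 4.0)]
totals = tumbling_window_totals(bets, window_seconds=10)
# totals == {0: 15.0, 10: 21.0, 20: 4.0}
```

A stream processor adds what this sketch leaves out: handling of late or out-of-order events, state checkpointing, and emitting each window's result as soon as the window closes.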

WebSocket connections maintain persistent bidirectional communication between clients and servers. This eliminates HTTP request overhead for real-time updates, reducing bandwidth usage and improving response times.

How can platforms handle massive concurrent user loads during peak events?

Horizontal scaling distributes load across multiple server instances. Container orchestration platforms like Kubernetes automatically spawn additional processing nodes based on demand metrics.

Load balancing algorithms distribute incoming requests across available servers using techniques like round-robin, least connections, or weighted routing. Advanced load balancers also consider server health and response times when making routing decisions.
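A minimal sketch of the least-connections strategy with a health check might look like the following. The server names and the shape of the two dictionaries are assumptions for the example; real load balancers track this state internally.

```python
from typing import Dict


def pick_least_connections(active: Dict[str, int], healthy: Dict[str, bool]) -> str:
    """Return the healthy server with the fewest active connections."""
    candidates = [server for server in active if healthy.get(server, False)]
    if not candidates:
        raise RuntimeError("no healthy servers available")
    # Ties are broken by server name so the choice is deterministic.
    return min(candidates, key=lambda server: (active[server], server))


active_connections = {"node-a": 12, "node-b": 3, "node-c": 1}
health = {"node-a": True, "node-b": True, "node-c": False}

chosen = pick_least_connections(active_connections, health)
```

Here node-c has the fewest connections but is skipped because it is marked unhealthy, so node-b receives the request.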

Auto-scaling policies monitor system metrics like CPU usage, memory consumption, and queue depths. Platforms configure scaling triggers to add capacity before performance degradation occurs, typically scaling at 70-80% resource utilisation.
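One way to express such a trigger is a proportional rule that sizes the fleet so utilisation returns to the target. The sketch below assumes a 75% target (the midpoint of the range above) and a hypothetical cap of 50 replicas; the rule resembles the one used by the Kubernetes Horizontal Pod Autoscaler, but the function itself is illustrative.

```python
import math


def desired_replicas(
    current: int, utilisation: float, target: float = 0.75, max_replicas: int = 50
) -> int:
    """Scale the replica count in proportion to how far utilisation is from target."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    desired = math.ceil(current * utilisation / target)
    # Clamp to at least one replica and at most the configured ceiling.
    return max(1, min(max_replicas, desired))


scale_up = desired_replicas(current=4, utilisation=0.90)      # above target: add capacity
steady = desired_replicas(current=4, utilisation=0.75)        # at target: no change
scale_down = desired_replicas(current=8, utilisation=0.375)   # well below target: shrink
capped = desired_replicas(current=40, utilisation=1.5)        # demand beyond the ceiling
```

In practice a cooldown period or stabilisation window is usually added so that short spikes do not cause the replica count to oscillate.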

Traffic shaping and rate limiting protect systems from abuse and ensure fair resource allocation. These techniques limit requests per user and implement queuing for non-critical operations during peak periods.
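Per-user rate limiting is often implemented with a token bucket. The sketch below is one such implementation under simple assumptions: a single process, one bucket per user, and the current time passed in explicitly so the behaviour is easy to follow. The class and parameter names are illustrative.

```python
class TokenBucket:
    """Token bucket: allows bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is permitted
        self.updated = now

    def allow(self, now: float) -> bool:
        """Consume one token if available; return whether the request may proceed."""
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = max(self.updated, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# One request per second sustained, with bursts of up to two requests.
bucket = TokenBucket(rate=1.0, capacity=2.0)
results = [bucket.allow(t) for t in (0.0, 0.0, 0.0, 1.0)]
# results == [True, True, False, True]
```

The third request at time zero is rejected because the burst allowance is spent; one second later a token has been refilled and the next request passes. A real deployment would call allow with a monotonic clock value and keep bucket state in a shared store so that limits hold across servers.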

What database optimisation techniques are essential for live betting systems?

In-memory databases like Redis or Hazelcast store frequently accessed data in RAM for sub-millisecond access times. These systems handle user sessions, current odds, and temporary calculations that require immediate availability.

Data partitioning distributes information across multiple database instances based on logical boundaries like geographical regions or sport types. Sharding strategies ensure even load distribution and enable independent scaling of database segments.
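The paragraph above describes partitioning on logical boundaries; where no natural boundary gives an even spread, a common alternative is to hash a key such as the user ID. The following sketch shows that hash-based variant. The function name and key format are assumptions for the example.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index using a stable hash."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # hashlib is used instead of the built-in hash(), which varies between processes.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# The same key always lands on the same shard.
first = shard_for("user-42", shard_count=8)
second = shard_for("user-42", shard_count=8)

# Many keys spread across all shards.
used_shards = {shard_for(f"user-{i}", shard_count=4) for i in range(100)}
```

Simple modulo sharding remaps most keys whenever the shard count changes, so systems that expect to add shards frequently tend to use consistent hashing or a lookup table instead.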

Caching layers reduce database load by storing computed results and frequently accessed data in fast-access storage. Multi-level caching uses L1 (application cache), L2 (distributed cache), and L3 (database cache) to optimise different access patterns.
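The lookup order across cache levels can be sketched as follows. Plain dictionaries stand in for the application cache (L1) and the distributed cache (L2), and a loader function stands in for the database; all of these names are assumptions, and expiry is omitted for brevity.

```python
from typing import Callable, Dict


class LayeredCache:
    """Read-through cache that checks L1, then L2, then falls back to a loader."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self.l1: Dict[str, str] = {}  # stands in for a per-process application cache
        self.l2: Dict[str, str] = {}  # stands in for a shared distributed cache
        self.loader = loader          # stands in for the database query
        self.loads = 0                # counts how often the backing store was hit

    def get(self, key: str) -> str:
        if key in self.l1:
            return self.l1[key]
        if key in self.l2:
            value = self.l2[key]
        else:
            value = self.loader(key)
            self.loads += 1
            self.l2[key] = value
        self.l1[key] = value  # promote into the fastest layer
        return value


cache = LayeredCache(loader=lambda key: key.upper())
first = cache.get("market:123")   # miss in both layers, loaded from the backing store
second = cache.get("market:123")  # served from L1
cache.l1.clear()                  # simulate a process restart
third = cache.get("market:123")   # served from L2, no new load
```

For fast-moving data such as live odds, each layer would also need a short time-to-live or explicit invalidation so that stale prices are not served.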

Read replicas distribute query load across multiple database copies whilst maintaining a single write master. Asynchronous replication keeps replicas close to current without slowing writes, at the cost of a short replication lag, so reads that must reflect the latest write are directed to the master.

How do you ensure data consistency across distributed live betting systems?

Eventual consistency models allow temporary data discrepancies across system nodes whilst guaranteeing convergence within defined time windows. This approach prioritises availability over immediate consistency for non-critical operations.

Distributed transaction management using two-phase commit protocols ensures atomic operations across multiple services. These mechanisms guarantee that complex operations like bet placement and account debiting complete successfully or roll back entirely.
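The two phases can be sketched in a single process as below. The Participant class, its state names, and the wallet and bet record participants are hypothetical; a real coordinator also needs durable logs, timeouts, and recovery from coordinator failure, none of which are shown.

```python
from typing import List


class Participant:
    """Hypothetical resource taking part in a distributed transaction."""

    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self) -> bool:
        """Phase 1: vote on whether the local work can be committed."""
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def rollback(self) -> None:
        self.state = "rolled_back"


def two_phase_commit(participants: List[Participant]) -> bool:
    prepared: List[Participant] = []
    for participant in participants:      # phase 1: collect votes
        if participant.prepare():
            prepared.append(participant)
        else:
            for earlier in prepared:      # any "no" vote undoes the prepared work
                earlier.rollback()
            return False
    for participant in participants:      # phase 2: everyone voted yes
        participant.commit()
    return True


wallet = Participant("wallet_debit")
bet_record = Participant("bet_record")
succeeded = two_phase_commit([wallet, bet_record])

wallet_retry = Participant("wallet_debit")
rejected_bet = Participant("bet_record", can_commit=False)
failed = two_phase_commit([wallet_retry, rejected_bet])
```

In the second run the bet record votes no, so the wallet debit that had already prepared is rolled back and the account is left unchanged.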

Conflict resolution strategies handle simultaneous data modifications through techniques like last-writer-wins, vector clocks, or application-specific merge functions. These approaches maintain data integrity when network partitions or timing issues occur.
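Vector clocks detect whether two versions of a record are causally ordered or were written concurrently. The comparison can be sketched as follows, with clocks represented as dictionaries from node name to counter; the representation and the returned labels are assumptions for the example.

```python
from typing import Dict


def compare_clocks(a: Dict[str, int], b: Dict[str, int]) -> str:
    """Return 'equal', 'before', 'after', or 'concurrent' for clock a relative to b."""
    nodes = set(a) | set(b)
    # A missing entry means that node's counter is still zero.
    a_le_b = all(a.get(node, 0) <= b.get(node, 0) for node in nodes)
    b_le_a = all(b.get(node, 0) <= a.get(node, 0) for node in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"


ordered = compare_clocks({"n1": 1}, {"n1": 2})
conflict = compare_clocks({"n1": 2, "n2": 0}, {"n1": 1, "n2": 1})
```

Only the "concurrent" result represents a genuine conflict. In that case the system falls back to a policy such as last-writer-wins or an application-specific merge, whereas "before" and "after" can be resolved by keeping the later version.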

Data synchronisation protocols ensure distributed nodes maintain consistent state through techniques like gossip protocols, leader election, or consensus algorithms like Raft.

What monitoring and alerting strategies are critical for live betting platforms?

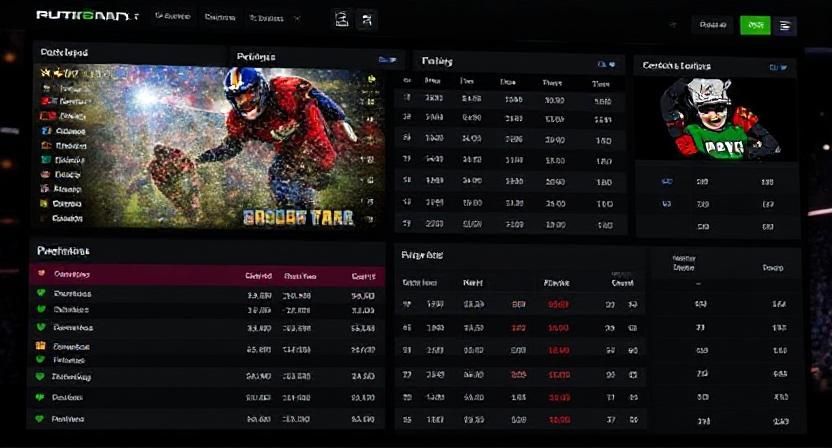

Real-time performance metrics track response times, throughput, error rates, and resource utilisation across all system components. Dashboards display key performance indicators including bet processing rates, odds update frequencies, and concurrent user connections.

Automated alerting triggers notifications when metrics exceed predefined thresholds. Critical alerts for system outages or performance degradation require immediate response, whilst capacity warnings provide advance notice of scaling requirements.
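Threshold-based alerting with two severity levels can be sketched as below. The metric names and the warning and critical values are assumptions chosen for illustration, not recommended settings.

```python
from typing import Dict, List, Tuple

# metric name -> (warning threshold, critical threshold); values are illustrative
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "p99_latency_ms": (80.0, 100.0),
    "error_rate": (0.01, 0.05),
}


def evaluate(metrics: Dict[str, float]) -> List[Tuple[str, str]]:
    """Return (metric, severity) pairs for every metric at or above a threshold."""
    alerts: List[Tuple[str, str]] = []
    for name, (warning, critical) in sorted(THRESHOLDS.items()):
        value = metrics.get(name)
        if value is None:
            continue  # metric not reported in this sample
        if value >= critical:
            alerts.append((name, "critical"))
        elif value >= warning:
            alerts.append((name, "warning"))
    return alerts


alerts = evaluate({"p99_latency_ms": 120.0, "error_rate": 0.02})
# alerts == [("error_rate", "warning"), ("p99_latency_ms", "critical")]
```

Production alerting rules usually also require the condition to hold for a minimum duration, which avoids paging on a single noisy sample.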

Capacity planning uses historical data and predictive models to forecast resource requirements. Platforms analyse traffic patterns around major sporting events to pre-scale infrastructure and avoid performance issues.

Distributed tracing follows requests across multiple services to identify performance bottlenecks and failure points. This visibility enables rapid diagnosis of issues in complex microservices architectures.

How can platforms optimise for different types of live betting scenarios?

In-play sports betting requires processing match events and updating hundreds of market odds within seconds. These systems prioritise throughput and use batch processing for non-critical updates to maintain performance during high-activity periods.

Live casino games demand ultra-low latency for user interactions with response times under 20 milliseconds. Optimisations include dedicated processing threads, pre-computed game states, and direct memory access for critical operations.

Esports betting presents unique challenges with rapid game state changes and younger, more technically demanding users. Platforms implement specialised parsers for game APIs and maintain dedicated infrastructure for esports events.

Multi-market betting complexity requires sophisticated correlation analysis to manage risk across related markets. Systems calculate cross-market dependencies and adjust odds dynamically to maintain balanced exposure.

What are the emerging technologies shaping the future of live betting data processing?

Machine learning algorithms predict traffic patterns and automatically adjust infrastructure scaling based on event schedules, historical data, and real-time indicators. These systems reduce operational overhead and improve performance consistency.

5G network deployment reduces latency between mobile devices and processing centres, making mobile betting more responsive. Lower latency also supports new betting products such as micro-betting on individual game moments.

Edge computing adoption places processing closer to users and data sources. Sports venues with local processing nodes can offer enhanced betting products with reduced latency and improved data quality.

Quantum computing research explores applications in risk calculation, fraud detection, and odds optimisation. Whilst practical implementations remain distant, quantum algorithms may eventually solve complex optimisation problems currently requiring approximation methods.